The Complete Guide to Running a Successful AI Proof of Concept for Enterprise Copilots and Agents in Higher Education

by Ardis Kadiu · Updated Oct 22, 2025

A comprehensive resource for higher education leaders navigating AI implementation.

Higher education is at a crossroads. The numbers are sobering: a groundbreaking MIT study reveals that 95% of generative AI pilots at companies are failing, with only 5% achieving rapid revenue acceleration. In higher education specifically, 62% of AI pilots never reach production, and 30% of projects are abandoned after proof of concept.

The stakes for getting AI adoption right have never been higher. Meanwhile, higher education faces unprecedented pressures: 33% of staff report they're likely to leave their jobs, 80% of students demand digital-native experiences, and budget constraints continue to intensify.

The answer isn't to avoid AI—it's to implement it strategically through well-designed proof of concept (POC) projects. This guide will walk you through everything you need to know about running successful AI POCs specifically for enterprise copilots and agents in higher education, with a particular focus on Element451's Bolt Agents as a leading example of AI workforce technology built specifically for higher ed.

Table of Contents

- What Makes an AI POC Successful in Higher Education

- What Criteria Should You Use to Evaluate AI Copilots and Agents?

- What Are the Core Use Cases for AI Agents in Higher Education?

- How Should You Structure Your AI POC Timeline?

- What Are Table-Stakes Capabilities by Use Case?

- Should You Build Custom AI or Buy from Specialized Vendors?

- What Red Flags Should You Watch For in AI Implementations?

- How Can You Quickly Assess AI Solution Quality?

What Makes an AI POC Successful in Higher Education

A successful proof of concept for an AI solution in higher education must be narrow in scope, time-bound, and focused on clear business outcomes. The goal isn't to build a full production system—it's to validate a specific capability or use case with minimal investment while addressing the very real challenges your institution faces.

The Definition of Success

A good POC targets a well-defined problem with measurable success criteria established upfront. For example, rather than launching a vague exploration of "AI for student support," successful POCs ask specific questions like:

- "Can an AI chatbot answer 70% of student FAQs and save our staff 20 hours per week?"

- "Can an AI agent reduce average student support email response time from 24 hours to under 1 hour?"

- "Can AI-powered application review reduce processing time by 50% while maintaining 90%+ accuracy?"

These questions root the POC in tangible business value rather than technology exploration. As one institution found, implementing Element451's AI platform led to a 24% decrease in call volume and a 10% increase in enrollment, demonstrating the real-world impact of well-implemented AI solutions.

Key Characteristics of Effective AI POCs

1. Narrow, Specific Scope

Target one workflow or use case—such as an admissions Q&A bot or an AI recruiting agent—not a multi-department overhaul. If you can't summarize your POC's purpose in a couple of sentences with clear success metrics, the scope is too broad.

For instance, Element451's Bolt Agents are designed as specialized digital teammates, each built for specific jobs like enrollment management, student success, or admissions support. This specialization exemplifies the focused approach that makes POCs successful.

Not Suitable for POC:

- AI transformation across the entire institution

- Custom LLM fine-tuning projects

- Multi-year enterprise AI strategy implementations

Ideal for POC:

- Single-channel chatbot for FAQ handling

- Automated application status updates

- AI-powered appointment scheduling

- Proactive at-risk student outreach

2. Short and Time-Boxed

Limit your POC to 4-6 weeks with defined phases (setup, development, testing, evaluation) and a hard stop. This creates urgency and prevents endless tinkering. The 30-day or 45-day sprint approach forces teams to focus on what truly matters and prevents "pilot purgatory"—where 88% of AI pilots languish indefinitely.

3. Clear Success Metrics

Establish quantifiable metrics before starting:

- For Support Agents: "AI handles 70% of inquiries without human help" or "Average task completion time cut by 50%"

- For Admissions Agents: "Application review time reduced by 80%" or "99% accuracy on fraud detection during testing"

- For Engagement Agents: "Increase lead-to-applicant conversion by 15% via automated follow-ups"

Real-world example: One Element451 partner saved about 160,000 minutes of staff time through intelligent admissions automation and AI assistants—a concrete, measurable outcome.

4. Business Value Focus

Every POC must tie to business outcomes: cost savings, faster response times, higher conversion rates, or improved user experience. "Technology for technology's sake" is a red flag.

For example, when Texas State Technical College (TSTC) adopted Element451's AI-powered CRM, they achieved:

- 30% increase in applications

- Conversion rates rising from 25% to nearly 90%

- Cost per submitted application dropping from $424 to $81

These are the kinds of business outcomes that justify AI investment.

5. Minimal Viable Integration

Don't over-engineer during the POC phase. Use just enough data and system integration to prove the concept. Focus on feasibility first—if successful, you can harden and scale later.

Element451's platform philosophy exemplifies this: their Bolt Agents integrate directly with your existing systems, whether you use Element451 as your CRM or alongside your current one, providing a rapid path to value without requiring massive infrastructure changes.

Why Most AI POCs Fail

The failure rates are staggering and well-documented. Understanding these failure modes helps you avoid them:

The Numbers:

- 95% of generative AI pilots fail to achieve rapid revenue acceleration (MIT study, 2025)

- 62% of AI pilots in higher education never reach production

- 30% are abandoned after proof of concept (Gartner, 2024)

Common Failure Patterns:

- Unclear objectives - No documented success criteria leads to endless experimentation

- Scope creep - Starting narrow but expanding until the POC becomes unmanageable

- Lack of stakeholder alignment - No executive sponsor or champion to drive decisions

- Poor data quality - Insufficient or incorrect data undermines AI performance

- Inadequate risk controls - Security and compliance gaps discovered too late

- No path to production - POC succeeds but no plan exists for scaling

- Building instead of buying - Internal builds succeed only 33% of the time vs. 67% for purchased solutions (MIT, 2025)

The MIT Insight: The core issue isn't the quality of AI models—it's the "learning gap" for both tools and organizations. Generic tools like ChatGPT excel for individuals but stall in enterprise use because they don't learn from or adapt to organizational workflows.

The Solution: Follow the structured approach outlined in this guide, partner with specialized vendors, and ensure your POC has clear objectives, defined timeline, and measurable success criteria.

What Criteria Should You Use to Evaluate AI Copilots and Agents?

When evaluating an AI copilot or agent in an enterprise POC, you need multi-dimensional criteria. Assess not only what the AI can do (functional capabilities), but also how well it does it (accuracy, efficiency, compliance). Here's a comprehensive evaluation framework:

1. Accuracy & Relevance of Responses

What to Measure:

- Factual accuracy for Q&A responses

- Task accuracy for actions like form filling or data updates

- Groundedness in knowledge sources (avoiding hallucinations)

How to Measure:

- Create a test set of known queries and check responses against ground truth

- For retrieval-augmented systems, evaluate whether answers are grounded in provided knowledge sources

- Aim for high task completion accuracy (≥80% success on test questions)

Performance Benchmarks: High-performing organizations report 85-95% accuracy on well-defined tasks. For example, one institution using Element451's Bolt Agents achieved 94% agreement with human readers in admissions application reviews.

RAG Quality Assessment: For knowledge-based agents, evaluate:

- Precision of retrieval - Does it fetch the right document or answer?

- Faithfulness of generation - Does it stick to retrieved info without adding unsupported claims?

- Hallucination rate - How often does it invent information?

2. Response Time & Efficiency

What to Measure:

- Latency from user question to AI answer

- Task completion time compared to human baseline

- Throughput capacity during peak loads

Performance Benchmarks:

- Support agents: <30 seconds for routine queries (top performers achieve <15s)

- Action completion: Measure how quickly the agent completes tasks vs. humans

- Leading organizations report achieving 85% faster response times with AI

Real-World Example: Element451's Bolt Agents work in real-time, pulling and pushing data instantly across integrated systems—no batch processing delays or overnight synchronization required.

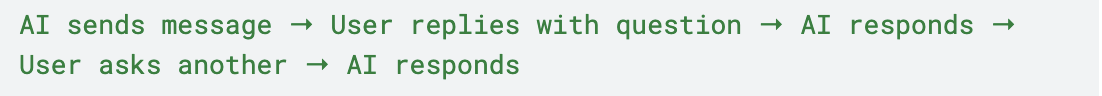

3. Context Handling & Conversation Quality

What to Measure:

- Multi-turn conversation coherence

- Context retention across interactions

- Ability to handle clarifications and follow-up questions

- User satisfaction scores

How to Test:

- Start a conversation, ask follow-ups, see if the agent remembers earlier context

- Ask the agent to summarize previous responses

- Switch topics and return to see if it can resume

Performance Targets:

- User satisfaction scores comparable to human support (4.0+ out of 5)

- Coherent conversations across 5+ turns without losing context

- Natural handling of clarifications without requiring users to repeat information

4. Knowledge Integration (RAG Performance)

For AI agents relying on knowledge bases or documents (typical for enterprise copilots), test how effectively the agent uses that knowledge.

Evaluation Criteria:

- Precision of retrieval - Fetches correct documents/answers from knowledge hub

- Faithfulness of generation - Sticks to retrieved info without hallucination

- Source attribution - Can show which sources informed its answer

- Graceful fallback - Says "I'm not sure" rather than inventing answers

Testing Approach: Create a test set of FAQs where answers exist in your knowledge base and score the agent on accuracy. High RAG fidelity means most answers align with source content with minimal hallucination.

Element451's Bolt Agents have access to your institution's comprehensive knowledge base, including self-service knowledge hubs, FAQs, and institutional data, ensuring responses are grounded in your actual policies and procedures.

5. Personalization & Data Access

What to Measure:

- Correct utilization of user/contextual data

- Appropriate personalization based on user profiles

- Permission and security model compliance

- Data privacy adherence

Test Scenarios:

- "What's my application status?" - Should pull user-specific data

- "Can you recommend courses based on my profile?" - Should use student data appropriately

- Request for unauthorized data - Should refuse appropriately

Table Stakes: The agent should only access data it's permitted to, respecting role-based access controls. It must follow your security model and comply with regulations like FERPA.

Element451's approach to data integration exemplifies this: Bolt Agents operate within your existing security model, inherit your permission structures, and access only the data they're authorized to use.

6. Multi-Channel Consistency

What to Measure:

- Consistent experience across channels (web, SMS, email, voice)

- Context maintenance if users switch channels

- Language support quality

- Channel-appropriate formatting

Test Approach: Test the agent on at least 2 channels (e.g., web chat widget and SMS). Verify:

- Similar answers regardless of channel

- Appropriate formatting for each channel (e.g., concise for SMS)

- Unified context if same user switches channels

Modern Expectations: Element451's Bolt Agents work across chat, email, web, text, and voice—in over 100 languages—ensuring students get help no matter how they reach out.

7. Action Execution & Integration

What to Measure:

- Success rate of action completion (booking meetings, updating records, sending emails)

- Accuracy of backend system updates

- Real-time vs. batch processing capability

- Integration depth with enterprise systems

Test Scenarios:

- "Can you schedule a campus tour for me next week?"

- "Register this student for orientation"

- "Send an info packet to this email address"

Then verify in backend systems that actions were performed correctly.

Performance Benchmark: If 10 scheduling requests are made, aim for 90%+ success rate. Any "pretend" actions (agent says it did something but didn't) fail this criterion.

Integration Quality: Top solutions have direct integration to enterprise systems, allowing them to both read and write data in real-time. For example, Element451's Bolt Agents work natively within the CRM, not as external tools requiring complex synchronization.

8. Fallback and Handoff Behavior

What to Measure:

- Recognition of limitations and appropriate responses

- Smooth escalation to human agents

- Context preservation during handoff

- User satisfaction with transitions

Test Scenarios:

- Ask deliberately difficult or out-of-scope questions

- Say "I need to speak to a person"

- Present a complex query beyond AI capabilities

Expected Behaviors:

- Polite acknowledgment of limitations

- Clear escalation path offered

- Context shared with human agents (no user repetition needed)

- Fallback messaging rather than wrong answers

9. User Experience & Tone

What to Measure:

- Brand alignment in communication

- Appropriate tone for audience

- Clarity and helpfulness of responses

- Consistency of personality across interactions

Evaluation Method:

- Review conversation transcripts for tone and phrasing

- Check brand voice alignment

- Gather user feedback (5-10 person panel in POC)

- Monitor for off-brand or inappropriate responses

Element451's Bolt Agents include customization options for tone and style, including a Copywriter Agent that helps ensure communications match your institutional voice.

10. Performance Metrics & ROI Indicators

Quantitative Metrics to Track:

Resolution Rate/Deflection:

- What % of inquiries did AI handle without human help?

- Target: 70%+ for support bots

- Example: One Element451 partner saw 24% decrease in call volume

Time Savings:

- Reduction in time to complete processes

- Example: AI cuts average handling time from 5 minutes to 2 minutes (60% improvement)

- Another partner saved 160,000 minutes of staff time

Error Reduction:

- Fewer mistakes in data entry or information provided vs. humans

- Target: Significant decrease from baseline

User Engagement/Conversion:

- For recruiting agents: Lead-to-applicant conversion rates

- Email open/click-through rates

- Student engagement metrics

- Example: CollegeVine's AI recruiter increased admission rates by 32%

Document Success Criteria: Define threshold goals upfront (e.g., "success = at least 50% reduction in response time and >30% of prospects engaged"). This makes go/no-go decisions clear.

11. Scalability & Robustness

What to Measure:

- Concurrent conversation handling

- Performance under load

- System stability and uptime

- Maintenance requirements

Test Approach:

- Mini stress-test with multiple simultaneous users

- Monitor for crashes or slowdowns

- Observe whether constant tweaking is needed

- Check availability of monitoring dashboards

While deep scalability testing may exceed POC scope, any instability with small user counts is a warning sign for larger deployment.

12. Compliance & Security

Critical Evaluation Points:

Data Guardrails:

- Use synthetic or anonymized data for sensitive scenarios

- Verify appropriate access controls (read-only where needed)

- Maintain audit logs for all actions

Security Testing:

- Attempt prompt injection attacks

- Request unauthorized information

- Verify refusal of inappropriate requests

- Check data masking for sensitive information

Regulatory Compliance:

- FERPA compliance for student data

- GDPR considerations where applicable

- Human-in-the-loop for high-risk actions

- Audit trail for autonomous changes

Human Oversight: Many successful POCs implement human-in-the-loop controls and oversight for high-risk actions, requiring human approval for certain outputs or decisions.

Real-World Standard: Element451's platform is SOC 2 compliant—the gold standard in security—with continuous monitoring by dedicated security staff and encryption that protects your isolated data.

Creating Your Balanced Scorecard

Use a balanced scorecard approach combining:

- Capabilities (what the AI can do)

- Performance (how well it does it)

- Impact (business outcomes achieved)

By the end of evaluation, you should have:

- Concrete data on accuracy, speed, and user satisfaction

- KPI impact measurements (time saved, costs reduced, conversion improved)

- Qualitative insights from users and conversation logs

- Clear assessment of readiness for next steps

What Are the Core Use Cases for AI Agents in Higher Education?

For enterprise AI copilots and agents in higher education, several high-impact use cases have proven themselves in real-world deployments. Below we outline key scenarios with specific evaluation tests for each, focusing on embedded AI agents that handle both inbound queries and outbound interactions.

Each scenario includes table-stakes capabilities the AI solution should have and performance improvements you should expect to see.

Use Case 1: How Can AI Help Students Discover Programs and Services?

The Challenge: Prospective students often struggle to find relevant information about programs, courses, and services by manually browsing complex websites. They need instant, personalized guidance to match their interests with your offerings.

The AI Solution: An AI program finder helps prospects discover offerings through natural conversation. Instead of clicking through endless catalog pages, students ask questions like "Do you have any online MBA programs?" or "What's the best program if I'm interested in data science?" and get instant, relevant guidance.

Table-Stakes Capabilities:

Comprehensive Knowledge Base

- Access to complete program catalog

- Course details, requirements, and schedules

- Robust NLP to understand varied user expressions

Intelligent Search

- Element451's Bolt Discovery provides AI-powered search that transforms how students find information

- Handles synonyms and related queries (recognizes "CS" means Computer Science)

- Provides personalized recommendations and follow-up questions

Multi-Channel Availability

- Consistent information across website, mobile app, and other channels

- Quick, relevant answers from official sources, not generic responses

POC Test Scenarios

Program Q&A Test:

- Ask about specific programs: "Do you offer a Master's in Cybersecurity?"

- Expected: Correct information or direct "no" if not offered

- Test with non-existent programs to ensure no hallucination

- Verify it suggests similar available programs when appropriate

Recommendation Test:

- Provide user interest/goals: "I like biology and chemistry, what majors might fit me?"

- Assess ability to interpret intent and map to offerings

- Check relevance and accuracy of recommendations

Follow-Up Context Test:

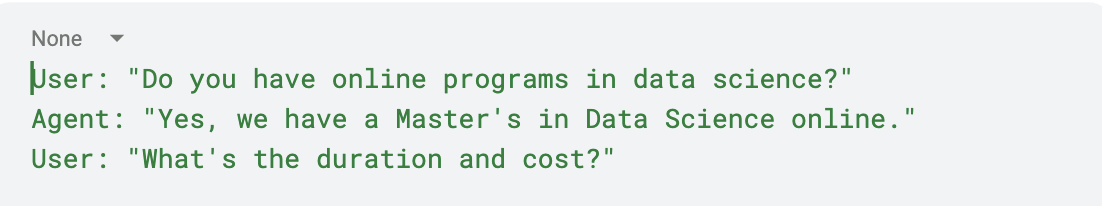

Agent should remember context (MS in Data Science) and provide accurate details.

Edge Cases:

- Typos or vague queries: "I want to study something with design"

- Should handle minor typos and return relevant results

- Tests underlying NLP robustness

Performance Indicators:

- User Success Rate: Did users find suitable programs/answers through the AI? In POC, have testers report whether they got what they needed within 1-2 queries.

- Information Accuracy: Out of 20 program-related questions, how many did AI answer correctly? Target: 80%+

- Interaction Efficiency: Fewer turns to get answer is better. Track average queries to resolution.

- Engagement Metrics: If available, track click-through on suggested links or program pages.

- Real-World Impact: Early adopters of AI discovery tools like Bolt Discovery report students finding information faster and expressing greater satisfaction with their site experiences, with significant reductions in time spent searching.

Use Case 2: Can AI Provide 24/7 Student Support and Answer FAQs?

The Challenge: Students expect instant answers to questions about admissions, financial aid, academic policies, and campus services—regardless of the time of day. Staff can't be available 24/7, and repetitive questions consume valuable time.

The AI Solution: An AI support agent handles inbound questions from students, applicants, and alumni via web chat, mobile app, or texting. It answers FAQs instantly and assists with personalized queries by looking up user data.

Table-Stakes Capabilities:

Knowledge Base Integration

- FAQs and policies (academic calendar, application steps, campus info)

- Connection to internal systems for personalized questions

- Element451's Bolt Agents access your self-service knowledge hubs and institutional data

System Integration for Personalization

- Connection to student information/CRM system

- Ability to retrieve application or account status

- Authentication for personal data access

Multi-Channel Availability

- Web, mobile, SMS, social media DMs

- Consistent answers across all channels

- Element451's agents work across chat, email, web, text, and voice

Live Handoff Capability

- Escalation to human staff when needed

- Ticket creation or live chat transfer

- Context preservation for smooth transitions

24/7 Reliability

- Always-on availability

- Stable performance

- Quick response times

POC Test Scenarios:

FAQ Questions: Test common questions from your knowledge base:

- "What are the admissions deadlines?"

- "What is the tuition for undergraduate students?"

- "Where is the financial aid office?"

Check that AI's answers match official information exactly. Test both common and less common questions to assess knowledge depth.

Personalized Query: Using test student account, ask:

- "What is my application status?"

- "Can I see my application checklist?"

- "Do I have any holds on my account?"

Agent should pull student's record and respond with specifics: "You have submitted all materials. Your application is currently under review, last updated Oct 10."

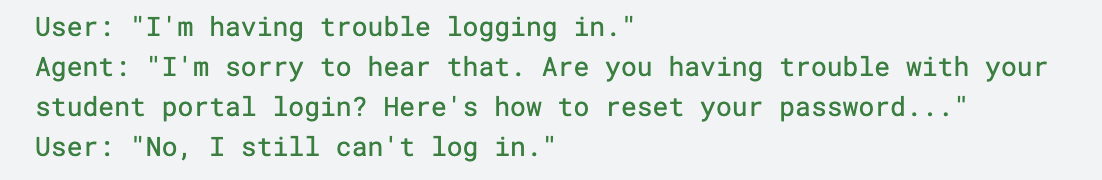

Multi-Turn Issue Resolution:

See if agent can follow troubleshooting flows and knows when to escalate or create support ticket.

Unanswerable/Off-Topic Questions:

- "What's the weather on campus today?"

- "Can you help me with my homework?"

Agent should gracefully deflect with appropriate response: "I'm here to help with admissions and student services questions."

Volume Test: Have multiple team members chat simultaneously to test concurrent handling capability.

Performance Indicators:

- Resolution Rate: What percentage of queries were fully answered by AI without human intervention? Target: >70% deflection rate.

- Response Time: Track first response time. Target: Near instant (a few seconds).

- Customer Satisfaction: Post-interaction surveys. Target: 4.0/5 or higher, comparable to human support.

- Handling Capacity: How many concurrent chats can the agent handle without quality degradation? If during peak times 5-10 students asked questions simultaneously, did all get timely answers?

- Error Rate: How many questions did AI get wrong or fail to answer? Document failure modes. Out of 20 questions, 18 answered correctly = 10% error rate.

- Real-World Results: One Element451 partner saw a 24% decrease in call volume after implementing their AI chatbot (BlazeBot), while simultaneously achieving a 10% increase in enrollment—demonstrating that AI support improves both efficiency and outcomes.

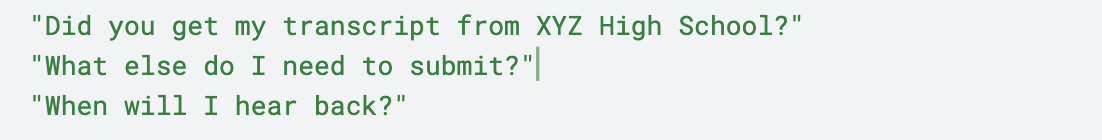

Use Case 3: How Can AI Agents Nurture Leads and Recruit Students Proactively?

The Challenge: Your admissions team is overwhelmed with leads but lacks time for personalized, timely follow-up. Many qualified prospects fall through the cracks because manual outreach can't scale or maintain consistency.

The AI Solution: An AI recruiting agent acts as a digital admissions counselor, reaching out to prospective students via email, SMS, or social media, and engaging them over time to nurture interest and guide them toward application.

Table-Stakes Capabilities:

Goal-Oriented & Proactive

- Automated workflows and cadences

- Detects new leads and sends intro messages

- Sends follow-ups based on engagement/timing

- Element451's Bolt Agents are designed to be proactive, not just reactive

CRM Integration

- Reads lead data (program interest, demographics, stage in funnel)

- Updates contact status and notes after interactions

- Element451's agents work natively in the CRM, ensuring real-time data consistency

Personalization at Scale

- Each of 100 prospects gets message with their name, relevant program info, location

- Draws from profile data automatically

- Dynamic content based on interests and behaviors

Multi-Channel Orchestration

- Email, SMS, social media DMs

- Matches channel to prospect preferences

- Coordinated messaging across channels

Natural Language Generation

- Engaging, human-like messages

- Institutional voice and brand alignment

- No obvious "robotic" tone or errors

- Element451's Copywriter Agent helps draft subject lines and build campaigns

Human Handoff

- Recognizes when prospect needs high-touch conversation

- Seamless transfer to human counselor

- Context sharing for smooth transitions

POC Test Scenarios:

Outbound Campaign Test: Provide 5-10 test leads with dummy contact info and profiles. Configure AI to send initial outreach to each.

Evaluate:

- Are messages personalized correctly (name, program interest)?

- Are they well-written and on-brand?

- Have marketing/admissions team review quality

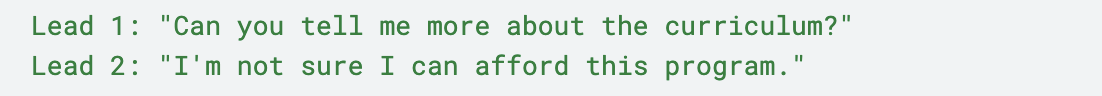

Lead Response Handling: Reply to AI's outreach as if you were a student:

Measure how AI handles these replies:

- Provides helpful info (pulls from knowledge base or predefined content)

- Keeps conversation going appropriately

- Adapts response to concern expressed

Test multi-turn threads:

Longer-Term Follow-Up: After initial contact, have AI wait two days and send follow-up to non-responders. Verify:

- Correctly only contacts non-responders

- Changes content appropriately (different benefit highlighted, deadline reminder)

- Demonstrates workflow logic and timing capability

Qualification/Meeting Booking: Test if AI can schedule meetings:

Does AI offer to schedule a call? Can it interface with calendar system or generate scheduling link?

CRM Update Verification: After POC run, check CRM/logs to verify:

- Interactions recorded ("Alex was contacted on Oct 10, asked about X, AI responded Y")

- Lead statuses updated (marked as "engaged" once they reply)

- Agent leaves audit trail for human review

Performance Indicators:

Response Rate: If AI messaged 10 leads and 6 responded, that's 60% engagement—likely higher than typical mass email.

Meetings/Applications Generated: AI got 3 out of 10 prospects to schedule call or start application = tangible impact.

Conversion Improvement: Compare to control group or previous campaigns. Real-world example: CollegeVine's AI recruiter increased admission rates by 32% through autonomous prospect engagement.

Message Quality: Did any prospects think they were chatting with a human? Positive experience reported?

Staff Feedback: Do admissions staff approve of AI's messages? Do they align with what staff would say?

Time Efficiency: If AI handled 50 back-and-forth emails in a week, calculate equivalent staff hours saved (might be 10+ hours of SDR time).

Error Monitoring: Zero major faux pas is the bar. Any serious errors (wrong info to wrong person) must be addressed before scaling.

Real-World Example: Element451's approach shows how Enrollment Agents can autonomously manage yield campaigns, adjusting tactics based on student engagement and achieving goals like getting 537 students enrolled when the target was 500—all while staff were unavailable.

Use Case 4: Can AI Streamline Application Processing and Management?

The Challenge: Application review is time-consuming and error-prone. Staff must read essays, check transcripts, verify documents, detect fraud, and communicate with applicants—all while maintaining consistency and fairness.

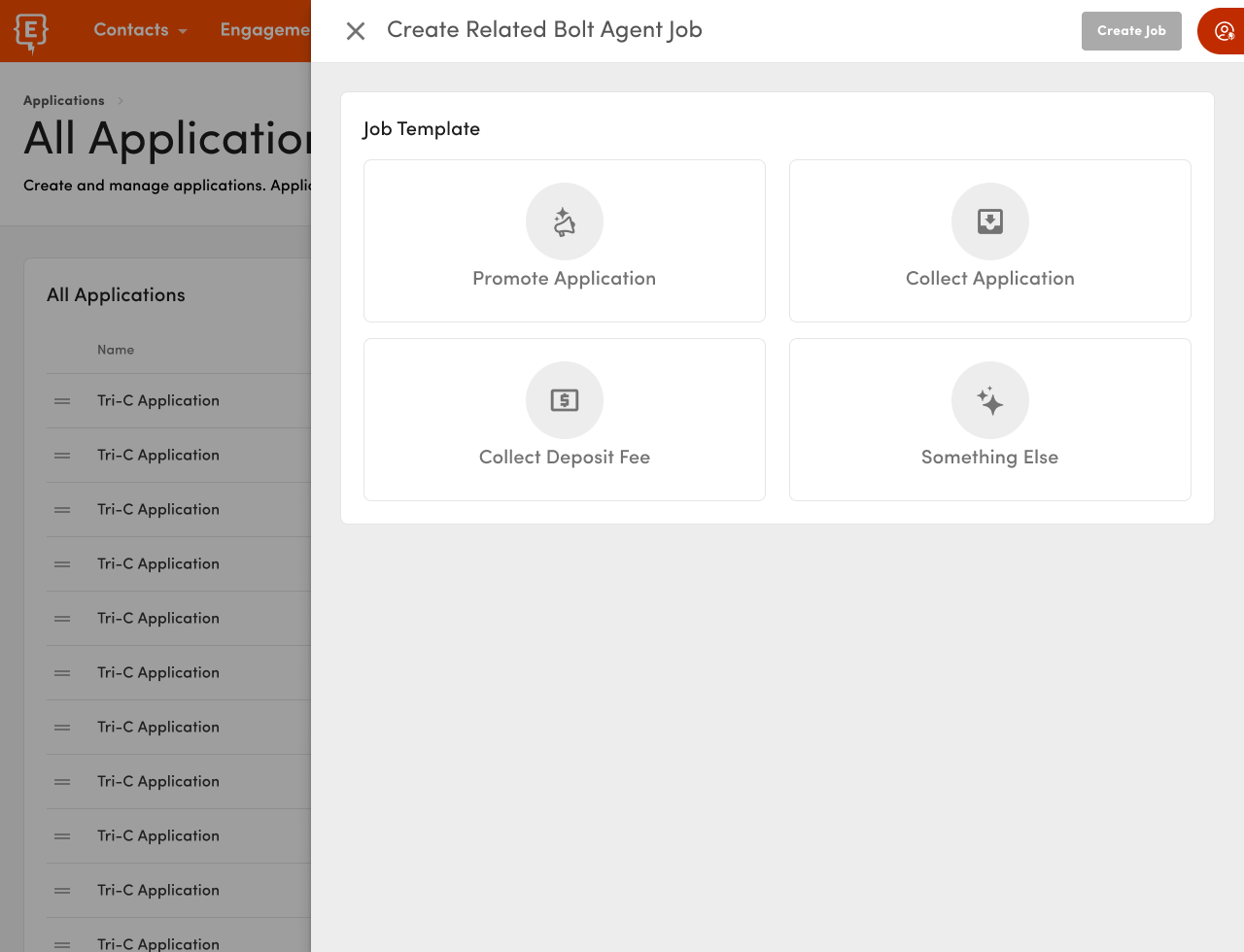

The AI Solution: AI assists in the application lifecycle by reading and evaluating applications, detecting fraudulent submissions, managing documents, and communicating with applicants about their status.

Table-Stakes Capabilities:

Data Integration

- Direct access to application system/data

- Can read application forms and documents

- Ability to write results back

- Element451's Bolt Agents include specialized Application Reader and Fraud Detector agents embedded in the platform

Document Processing

- Large language models to interpret unstructured data (essays, transcripts)

- Guided by institutional rubric/criteria

- Pattern recognition for fraud detection (metadata analysis, submission timing, IP)

Automated Evaluation

- Process 100% of incoming applications

- Triage or score applications

- Provide rationale for scores

Applicant Communication

- Check application status

- Send automated reminders ("Your application is 90% complete")

- Integration with email/SMS sending capability

Human-in-the-Loop

- Support for human review of high-impact decisions

- Provides rationale/recommendations that staff can review

- Not fully automated admission/denial decisions

POC Test Scenarios:

Rubric-Based Scoring: Take 10 past application files (anonymized for privacy). Have AI "read" and evaluate them.

Compare outputs to actual outcomes or admissions officer evaluation:

- If 7 were admitted and 3 rejected, does AI correctly identify most?

- If using scoring rubric, does AI's scoring align within reasonable margin?

Target: 90%+ agreement with human decisions.

Real-World Benchmark: One admissions AI achieved 94% agreement with human readers, completing in minutes what traditionally takes hours.

Fraud/Anomaly Detection: Plant "fake" applications with obvious issues:

- Identical essay text

- Suspicious email domains ("scam123@cheapdiplomas.com")

- Impossible GPA values

Include normal ones to test for false positives.

Measure:

- Does AI flag problematic ones?

- Are there too many false alerts?

Real-World Example: Kellogg Community College's AI agent flagged 186 fraudulent applications in one week, demonstrating effectiveness at scale.

Document Handling: Give AI sample transcript and ask for:

- Student's GPA

- Course list

- Specific requirements met

Test if AI can parse documents correctly.

For essays, test if AI can summarize main points in a few sentences.

Applicant Inquiries: Ask questions an applicant would ask:

AI should reference specific applicant's status and provide tailored answers.

Process Management Actions: If POC environment allows, test if AI can:

- Mark application as reviewed

- Send email to applicant

- Update checklist items

- Trigger workflow steps

Performance Indicators:

Time and Labor Savings: If staff normally reviews 10 apps per day, and AI reviews 10 in an hour, calculate throughput increase.

Target: Process applications in minutes vs. hours.

Accuracy/Consistency: Did AI's outputs align with expected results? Calculate percentage agreement with human decisions.

For fraud detection: Report precision/recall if enough cases tested.

Reduction in Manual Work: "AI auto-filled 80% of evaluation form fields, so staff only manually handled 20%."

Application Completion Rate: With AI reminders, do more applicants finish their applications?

Staff Feedback: Do admissions officers find AI's scores or summaries useful?

"The AI's summary of each application saves me tons of reading—it highlighted all the key parts correctly."

Real-World Impact: One institution saw 10% increase in enrollment after deploying AI assistant for admissions and 24% decrease in inbound calls because AI handled those questions.

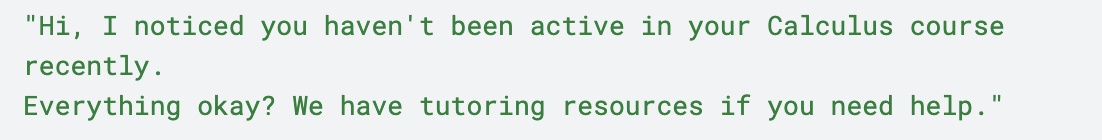

Use Case 5: How Can AI Support Student Success and Retention?

The Challenge: At-risk students often slip through the cracks. Advisors can't monitor every student's progress in real-time, and students don't always know when to ask for help until it's too late.

The AI Solution: AI agents monitor student data and proactively engage students to improve success and retention. They answer advising questions, send proactive nudges to at-risk students, and provide just-in-time information and coaching.

Table-Stakes Capabilities:

Academic and Engagement Data Access

- Feed from LMS (last login, grades, assignment completion)

- Student information system (enrollment status, credits, GPA)

- Advising notes and degree audit data

Notification Capability

- Push messages via email/SMS/app when triggers occur

- Proactive outreach to at-risk students

- Scheduled check-ins and reminders

Advising Knowledge

- Can interpret student record (credits earned, major requirements)

- Access to degree audit/program requirements

- Curated knowledge base of study tips, campus resources

Goal-Oriented Dialogue

- Beyond factual Q&A into advisory territory

- Can offer tips and resources

- Schedule appointments with counselors

Sensitive Communication

- Warm, supportive tone for personal/emotional topics

- Knows when to encourage contacting human counselor

- Appropriate language for student audience

Logging and Alerts

- Records all interactions

- Alerts staff for serious issues

- Flags students expressing distress

POC Test Scenarios:

At-Risk Outreach: Create scenario where student meets risk criteria:

- Hasn't logged into LMS for 2 weeks

- GPA dropped below threshold

- Missing assignment deadlines

Trigger AI to send nudge:

Evaluate:

- Is message supportive and useful?

- Contains correct info (course names, resource links)?

- Appropriate tone?

Student Inquiry: Act as student and ask:

AI should:

- Provide comprehensive, empathetic answers

- Ask for specifics or provide general advice

- Reference appropriate procedures and resources

- Know or retrieve degree requirements

Multi-Turn Guidance: Test coaching-style dialogue:

See if AI can engage in semi-structured conversation offering strategies or campus resources (workshops, counseling).

Escalation to Advisor: Say something concerning:

Expected behavior:

- Respond kindly

- Suggest talking to counselor

- Possibly notify human staff

- Don't try to handle serious personal crisis alone

Data Query: If possible, ask:

AI should compute or retrieve accurate information from student record.

Performance Indicators:

Student Interaction Rate: Do students actually use the AI when prompted?

If AI sends 20 nudges, did at least 50% of students respond or take action?

Questions Answered: If students ask 50 questions over trial, how many could AI answer accurately?

Proxy Feedback: Survey: "Did the AI help you resolve your issue?" "Would you trust it for advice in the future?"

Aim for positive responses as evidence of usefulness.

Staff Efficiency: Did AI reduce workload for advisors?

"AI handled X interactions that would have otherwise been emails to advisors."

Engagement Improvement: Did more at-risk students register after receiving AI nudges?

Did students get quicker answers than waiting for advisor meetings?

Real-World Context: McKinsey predicts that generative and agentic AI could automate up to 30% of work hours in higher education by 2030, enabling staff to focus on more strategic, human-centered responsibilities like complex student cases.

Use Case 6: Can AI Break Down Data Silos and Provide Unified Insights?

The Challenge: Data lives in silos—CRM for admissions, SIS for student records, LMS for coursework, advancement system for giving. Leaders need integrated insights but pulling reports manually is time-consuming and often incomplete.

The AI Solution: An AI copilot unifies data from various sources and answers questions that span those silos in natural language. It serves as a smart query engine or analyst that administrators can ask complex questions.

Table-Stakes Capabilities:

Cross-System Integration

- Access to multiple databases or unified data warehouse

- Real-time data access, not batch processing

- Element451 eliminates the "wrapper trap" by providing real, native integration

Natural Language Query

- Translates user questions into data lookups/analysis

- No specialized SQL or technical skills required

- Enterprise search capabilities

Permission Enforcement

- Respects role-based access

- An advisor shouldn't get finance data if not allowed

- Secure query processing

Summarization and Reporting

- Provides digestible summaries, not just raw data

- Can generate simple visualizations if applicable

- Clear, actionable answers

Factual Grounding

- Answers based on actual data, not AI guessing

- Quotes numbers directly from database

- Direct queries via API or SQL

POC Test Scenarios:

Cross-System Query: Ask questions requiring multiple data sources:

Requires: Admissions data (applicants, admitted) + Enrollment data (matriculated)

AI should answer correctly with calculated percentage yield rate.

Individual 360° View: Ask about one student requiring multiple data points:

AI should pull:

- Application (Fall 2024)

- Admission decision (admitted)

- Enrollment confirmation

- Current GPA (3.5 after first semester)

Check answer for completeness and accuracy.

Trend Analysis: Ask:

If connected to databases, AI should calculate and output structured answer.

Complex Request: Ask:

Tests multi-step reasoning: group by category, compute averages.

Performance Indicators:

Answer Correctness: Verify AI's answers against manual calculations for several queries.

If AI is right, accuracy is validated. If wrong, determine if it's data access issue or AI logic.

Speed to Insight: "AI responded in 5 seconds with the result, whereas doing it manually took 5 minutes."

Breadth of Questions: Out of 10 diverse questions, AI could handle 8 = good coverage.

Note gaps for any it couldn't handle.

Stakeholder Reaction: Show POC to executive or analyst. Ask if this would be useful in their job.

"Wow, I could just ask and get answers without pulling reports" = strong perceived value.

Security Compliance: Ensure AI didn't violate permissions.

Test with user role that should be restricted. AI should refuse certain data requests.

Insight Discovery: If AI uncovered useful insight during POC that team wasn't aware of, that's a huge win.

Example: "Students who applied late had 20% lower yield."

Platform Advantage: Element451's unified platform approach means multiple agents (Admissions, Engagement, Success, Service) work together on the same data model, enabling these cross-functional insights without the data fragmentation common in multi-vendor stacks.

How Should You Structure Your AI POC Timeline?

A well-structured POC follows a clear timeline with defined phases, milestones, and deliverables. Here's a proven 4-6 week blueprint you can adapt:

Week 1: Discovery & Scoping

Objectives:

- Define POC objectives and success metrics

- Gather stakeholder requirements

- Select specific use case(s) to focus on

- Identify data and access needs

Key Activities:

Requirements Gathering

- Meet with stakeholders (admissions director, support manager, etc.)

- Document their pain points and goals

- Example: "We want to reduce response time" or "We need >70% auto-answer rate"

Scope Definition

- Create mini Statement of Work (SOW)

- Define what's in-scope vs. out-of-scope

- Example In-Scope: AI chatbot for admissions FAQs integrated with knowledge base

- Example Out-of-Scope: Integration with financial aid system, full production deployment

Success Criteria Documentation

- Write specific, measurable goals

- Example: "Success = at least 50% reduction in response time and >30% of prospects engaged"

- Get stakeholder sign-off

Data and System Access

- Identify which systems/APIs need to be connected

- Ensure at least read-access to them

- Prepare test data or dummy accounts if needed

Deliverables:

- POC Plan Document (success criteria, timeline, team roles, assumptions)

- Stakeholder sign-off confirmation

- Access credentials secured

Best Practices: Before starting any pilot, clearly define:

- Go/no-go decision criteria

- Timeline with hard deadlines

- Resource allocation

- Expected outcomes with quantifiable metrics

Week 2: Design & Setup

Objectives:

- Set up technical foundation

- Design conversation flows or agent logic

- Configure integrations

- Establish guardrails

Key Activities:

Integration Planning

- Configure API access

- Prepare knowledge base content

- Set up environment (sandbox/trial of AI platform)

Agent Configuration

- Create the agent(s)

- Define skills and permissions

- Example: Admissions Agent with knowledge hub access and People module access

- Element451's rapid onboarding means you can get started in just one day

Guardrails Implementation

- Set appropriate access controls

- Limit to read-only if writing not needed

- Put in placeholders for escalation triggers

- Ensure security and privacy requirements met

Test Planning

- Design test cases and scenarios

- Create success metric measurement approach

- Develop test script for evaluation phase

Data Preparation

- Import FAQs into knowledge base

- Load sample student records for personalization testing

- Use anonymized data for sensitive scenarios

- Get approvals for any real data usage

Deliverables:

- Configured AI agent ready for initial trial

- Test plan and test script

- Knowledge base populated

- Integration connections established

- Security checklist completed

Best Practices: Ensure data guardrails are in place from the start:

- Use synthetic or anonymized data where possible

- Implement audit trails for all actions

- Set up human-in-the-loop for high-risk actions

Weeks 3-4: Development & Iteration

Objectives:

- Test the agent with real scenarios

- Iteratively refine based on feedback

- Fix issues and improve performance

- Prepare for formal evaluation

Key Activities:

Internal Testing

- Run through core use case flows

- Test with team members

- Log all interactions

Iterative Refinement

- Fix obvious issues (wrong answers, missing FAQs)

- Adjust prompts for better understanding

- Refine knowledge content

- Fine-tune integration settings

Monitor and Analyze

- Review logs and dashboards

- Track which queries succeeded/failed

- Monitor confidence scores

- Identify knowledge articles used

Integration Validation

- Test API calls thoroughly

- Ensure data fetching works reliably

- Verify write-back capabilities if applicable

- Simplify if needed (reduce scope rather than get stuck)

Stakeholder Updates

- Brief weekly check-ins

- Share progress and demo

- Ensure alignment with expectations

- Get feedback before final test

Deliverables:

- Stable agent configuration

- List of resolved issues

- Updated knowledge base

- Demo to stakeholders

- Refined test scenarios

Agile Approach:

This is your sprint phase. Make quick iterations, test frequently, and don't aim for perfection—aim for "good enough to evaluate."

Element451's approach emphasizes rapid deployment: agents come "off the shelf, pre-trained, and ready to work—no tech skills required."

Week 5: Testing & Validation

Objectives:

- Execute structured evaluation against success criteria

- Collect quantitative and qualitative data

- Gather user feedback

- Document findings

Key Activities:

Formal Testing

- Have end-users or testers interact with agent

- Execute all test scenarios from test plan

- Log all Q&A pairs and outcomes

- Note which queries succeeded/failed

Metrics Collection

- Measure accuracy percentage

- Track response times

- Calculate deflection/resolution rates

- Compare to baseline or control group if possible

User Feedback

- Survey or interview testers

- Ask: "Did you think responses were helpful?"

- Gather: "Would you use this service?"

- Note any usability issues

Comparison Analysis

- A/B testing if possible (AI vs. human handling)

- Before/after metrics (last year's response time vs. now)

- Benchmark against industry standards

Compliance Monitoring

- Ensure no sensitive data leaked

- Verify agent followed rules

- Check audit logs

- Confirm security requirements met

Deliverables:

- Test results dataset

- Quantitative metrics computed

- Qualitative observations compiled

- User feedback reports

- Issue tracker with problems encountered and status

Real-World Validation:

Studies show that AI admissions agents can perform rubric-based evaluations in minutes what traditionally takes hours, with 94% agreement with human readers—these are the kinds of validation points you're looking for in your POC.

Week 6: Evaluation & Next Steps

Objectives:

- Analyze results and calculate ROI

- Compare against success criteria

- Make go/no-go recommendation

- Plan for scale-up if approved

Key Activities:

Results Analysis

- Calculate ROI and impact estimates

- Example: "AI could deflect 100 inquiries/month = 25 staff hours saved"

- Translate to dollars if possible

Success Criteria Comparison

- Review each criterion from Week 1

- Did you meet/exceed them?

- If not, why? Partially met?

Identify Strengths and Weaknesses

- What worked well? (e.g., 100% correct on FAQ answers)

- What struggled? (e.g., confused in long dialogues)

- Be honest and specific

ROI Documentation

- Time savings: "From 45 minutes to 8 minutes per resolution"

- Cost reduction: "From $424 to $81 per application"

- Quality improvement: "94% agreement with human evaluators"

Create POC Report

- Executive summary

- Methodology used

- Results (charts/tables of metrics)

- Key findings and insights

- Strengths and limitations

- Recommendations

Formulate Recommendation

- Go: Met goals, ready for pilot or production

- Go with conditions: Met most goals, need specific improvements

- No-Go: Fell short, not ready

Implementation Roadmap

- If going forward, outline next steps

- What resources needed?

- What timeline?

- What changes required?

- How to scale?

Stakeholder Presentation

- Present findings and demo

- Show concrete results

- Make recommendation

- Get buy-in for next phase

Deliverables:

- POC Final Report

- Executive presentation deck

- Go/no-go recommendation with supporting data

- Implementation roadmap (if proceeding)

- Lessons learned document

Critical Decision Point:

Avoid pilot purgatory. At week 6, make the call. If you keep extending "to tweak and get better results," stop and ask if core goals have been met. Make a decisive recommendation based on evidence.

Real-World Success:

Element451's partners typically see results quickly:

- 8.5% increase in full-time equivalent students

- 300% surge in event registrations

- 80% reduction in application review time

- 160,000 minutes of staff time saved

These are the kinds of outcomes a successful POC should point toward.

What Are Table-Stakes Capabilities by Use Case?

Understanding the "must-have" features for AI solutions in each use case helps you avoid vendor overselling and technology underdelivering. Here are the baseline capabilities required for success:

For Conversational Support Agents (Chatbots)

Table-Stakes Requirements:

Multi-Channel Chat Interface

- Web, mobile, SMS, social media

- Consistent answers across channels

- Element451's agents work across chat, email, web, text, and voice

Knowledge Base Integration

- Access to curated FAQ/knowledge repository

- Authoritative answers from official sources

- Real-time knowledge updates

User Context Lookup

- Integration with student/user account data

- Personalized support based on user profile

- Authentication for personal data access

Live Handoff Mechanism

- Escalation to human support when needed

- Ticket creation or live chat transfer

- Context preservation (no user repetition)

Performance Evidence: With these features, expect:

- High first-contact resolution (knowledge base enables end-to-end FAQ handling)

- Fast response times (always available, responds in seconds)

- Reduced human workload (no manual lookup needed)

- High deflection rate (>70% of FAQs answered correctly)

Missing Any? Performance will drop. No knowledge base = generic/incorrect answers. No context lookup = can't personalize. No handoff = frustrated users stuck with AI.

For Outbound Engagement Agents (AI SDRs/Recruiters)

Table-Stakes Requirements:

CRM Integration

- Pull lead info for personalization

- Update statuses and log interactions

- Element451's native CRM integration eliminates data sync issues

Multi-Channel Outreach

- Email, SMS, social media DMs

- Coordinated messaging across channels

- Channel preference matching

Personalization Engine

- Dynamic content based on prospect data

- Name, program interest, location, stage

- Scales to hundreds/thousands of prospects

Automated Follow-Ups with Branching Logic

- Scheduled next outreach

- Response-based workflow adjustments

- "If no reply in 3 days, send reminder"

Campaign Management

- Define multi-touch sequences

- Track engagement metrics

- Pause/adjust based on responses

Performance Evidence: With these in place, expect:

- Improved engagement metrics (higher open/reply rates from targeted, timely messages)

- Shorter lead response time (inquiries get follow-up within minutes vs. hours/days)

- Higher conversion (32% higher admission rates reported with AI recruiters)

- Personalization impact (prospects feel communication is relevant)

Missing Any? Without multi-touch workflow = one-and-done messages, not true nurturing. Without CRM integration = stale data and inconsistent records. Without personalization = generic batch-and-blast (poor results).

For Workflow Automation Agents (Application Processing, Internal Copilots)

Table-Stakes Requirements:

Deep System Integration

- Operates within enterprise systems, not externally

- Element451's agents work natively in the CRM, not as bolted-on tools

Read and Write Data Capabilities

- Can fetch AND update records

- Real-time data access (not batch/export)

- No duplicate data or sync delays

Multi-Step Logic Execution

- Can complete complex workflows

- Trigger actions (send emails, create tasks)

- Execute end-to-end processes

Pre-Built Skills/Templates

- Common tasks ready out-of-box

- Application support, event registration, etc.

- Institutional knowledge embedded

Performance Evidence: With tight integration, expect:

- Faster turnaround (applications processed in minutes vs. days)

- Fewer errors (consistent rule application, 80% error reduction in some cases)

- Improved throughput (AI handles volume spikes without fatigue)

- Better data consistency (writes back to system of record, avoiding stale data)

Missing Any? If solution requires manual CSV uploads = not table stakes. If can't update records = read-only tool, not automation. If external sync needed = data quality problems and delays.

Red Flag Example: Relying on exports leads to:

- Frequent inaccuracies

- Stale data

- Inability to handle edge cases

- Security vulnerabilities from data duplication

For Analytical/Data Agent Use Cases

Table-Stakes Requirements:

Access to All Relevant Data Sources

- Not just one system—multiple integrated

- Real-time data feeds

- Comprehensive coverage

Unified Semantic Layer

- Knowledge graph to interpret data relationships

- Ability to join across sources

- Context understanding

Secure Query Processing

- Role-based access enforcement

- Permission checks before returning data

- Audit logs of queries

Natural Language to SQL/Query Translation

- Understands plain language questions

- Converts to actual database queries

- Returns structured, accurate results

Performance Evidence: With these capabilities, expect:

- Faster insights (query turnaround from days to minutes)

- Increased data usage (non-technical users can access data)

- High accuracy (near 100% on factual queries, pulling from database)

- Cross-domain answers (can answer questions spanning multiple systems)

Missing Any? If only accesses one table = can't answer complex questions. If doesn't enforce permissions = security risk. If guesses instead of querying = hallucinations and wrong numbers.

General Table-Stakes for All Enterprise AI

Universal Requirements:

Authentication & Security

- SOC 2 compliance or equivalent

- Element451 is SOC 2 compliant

- Encryption at rest and in transit

- Secure API access

Audit Logging

- Every AI action recorded

- Reviewable by staff

- Compliance trail

Human Oversight Tools

- Ability to review/approve before action

- Easy corrections/edits

- Dashboard for monitoring

Maintainability

- Easy to update knowledge base

- Simple prompt adjustments

- Self-service configuration options

Why These Matter: These don't show up as metrics but enable trust. Stakeholders more comfortable knowing there's traceability. Easy maintenance = sustainable long-term.

Performance-Capability Connection: Always connect features to benefits:

- "Because agent is fully integrated into CRM, it had immediate access to student data and answered status questions with 100% accuracy—no lag or sync issues"

- "AI ran on outdated data due to no real-time integration, leading to incorrect answers—a table-stakes gap to address"

This helps communicate to stakeholders why certain capabilities (like real-time API integration) justify investment—they directly impact performance outcomes.

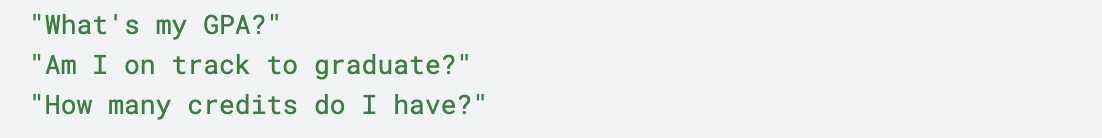

Purchasing AI tools from specialized vendors: 67% success rate Building internally: 33% success rate (one-third as often)

Should You Build Custom AI or Buy from Specialized Vendors?

One of the most critical decisions in your AI journey comes before the POC even begins: should you build your own AI solution in-house or purchase from a specialized vendor? MIT's groundbreaking 2025 study "The GenAI Divide: State of AI in Business" provides clear, data-driven guidance.

The MIT Research Findings

Based on 150 interviews with leaders, a survey of 350 employees, and analysis of 300 public AI deployments, MIT researchers found a stark divide in success rates:

Purchasing AI tools from specialized vendors: 67% success rate Building internally: 33% success rate (one-third as often)

As reported in Fortune, Aditya Challapally, lead author of the MIT report and head of the Connected AI group at MIT Media Lab, noted: "Almost everywhere we went, enterprises were trying to build their own tool, but the data showed purchased solutions delivered more reliable results."

Why Internal Builds Struggle

The core issue isn't the quality of AI models—it's the "learning gap" for both tools and organizations. While executives often blame regulation or model performance, MIT's research points to flawed enterprise integration as the real culprit.

Generic Tools Fall Short in Enterprise: Generic tools like ChatGPT excel for individuals because of their flexibility, but they stall in enterprise use because they don't learn from or adapt to organizational workflows. They lack:

- Integration with institutional systems

- Understanding of your specific processes

- Ability to access and act on your data

- Customization for your use cases

- Compliance with your security requirements

The Build-It-Yourself Trap: When institutions attempt to build their own AI solutions, they typically encounter:

Underestimated Complexity

- AI integration requires deep technical expertise

- Maintaining and updating models is resource-intensive

- Integration with multiple systems is exponentially harder than anticipated

Resource Drain

- Development teams pulled from other priorities

- Ongoing maintenance requirements

- Constant need to keep up with rapidly evolving AI capabilities

Longer Time to Value

- 12-18 months to build vs. days/weeks to deploy purchased solutions

- By the time custom solution is ready, technology may be outdated

- Opportunity costs of delayed implementation

Higher Total Cost of Ownership

- Development costs

- Ongoing maintenance and updates

- Staff training and retention

- Infrastructure requirements

The Specialized Vendor Advantage

Element451 exemplifies why specialized vendor solutions succeed at twice the rate of internal builds:

1. Purpose-Built for Higher Education

- Bolt Agents are pre-trained for higher ed use cases

- Come "off the shelf, pre-trained, and ready to work—no tech skills required"

- Designed specifically for admissions, enrollment, student success

2. Native Integration

- Not a wrapper or generic tool adapted for education

- Built to work natively within your CRM and systems

- Real-time data access without batch processing or exports

3. Rapid Deployment

- Get up and running in days, not months

- Self-service onboarding or white-glove implementation

- Immediate value realization

4. Continuous Improvement

- Vendor invests in R&D to keep pace with AI advances

- Regular updates and new capabilities

- Benefit from aggregated learnings across customer base

5. Proven Results Rather than experimental internal builds, specialized vendors bring proven outcomes:

- 24% decrease in call volume, 10% increase in enrollment

- 160,000 minutes of staff time saved

- 94% agreement with human evaluators

MIT's Key Success Factors

The MIT study identified critical factors that separate successful AI deployments from failures:

✓ Partner with Specialized Vendors Don't go it alone. Leverage expertise of companies that have already solved the integration challenges.

✓ Choose Tools That Learn and Adapt Generic AI assistants don't work in enterprise. Select solutions that integrate deeply with your workflows and data.

✓ Empower Line Managers Success comes from empowering department leaders—not just central IT or AI labs—to drive adoption.

✓ Focus on Back-Office Automation First MIT found the biggest ROI in back-office operations, despite most budgets going to sales and marketing. Start where impact is highest.

✓ Select Solutions with Deep Integration Capability Surface-level integrations fail. Choose platforms that can access, update, and act on your data in real-time.

The Financial Reality

MIT's research reveals a critical misalignment in resource allocation:

- Over 50% of GenAI budgets go to sales and marketing tools

- Biggest ROI comes from back-office automation

- Eliminating business process outsourcing

- Cutting external agency costs

- Streamlining operations

For higher education, this translates to:

- Higher ROI from: Application processing, student records management, advising automation

- Still valuable but secondary: Marketing chatbots, recruitment outreach

Element451's platform addresses both, but institutions see fastest payback from operational efficiency gains.

When Building Might Make Sense

There are rare cases where building custom AI might be justified:

Consider Building If:

- You have truly unique requirements no vendor can meet

- You have dedicated AI/ML team with higher ed domain expertise

- You have 12-18 month timeline and substantial budget

- Your use case is highly specialized and strategic differentiator

But Even Then: Most institutions benefit from a hybrid approach: purchase specialized solutions for core functions (admissions, student support, enrollment) and build custom tools only for truly unique needs.

The Element451 Approach: Best of Both Worlds

Element451's architecture demonstrates why specialized vendors outperform builds:

Pre-Built But Customizable:

- Agents come ready for higher ed use cases

- Customizable to your institution's specific needs

- No starting from scratch

Integrated Not Isolated:

- Works natively in your CRM (Element451's or yours)

- Not a separate system requiring complex integration

- Real-time data access and updates

Continuously Improving:

- Regular platform updates

- New AI capabilities added

- Benefit from Element451's R&D investment

Proven at Scale:

- Powering over 60 million student journeys

- Dozens of successful deployments

- Established best practices

Making Your Decision

Before your POC, answer these questions:

Build Questions:

- Do we have dedicated AI/ML expertise with higher ed experience?

- Can we commit 12-18 months and significant budget to development?

- Is our use case so unique no vendor can address it?

- Do we have capacity to maintain and update the solution long-term?

Buy Questions:

- Does a vendor solution address 80%+ of our needs?

- Can we deploy in weeks instead of months?

- Will we benefit from vendor's ongoing R&D?

- Is the vendor proven in higher education?

If you answered "yes" to the Buy questions and "no" to most Build questions, purchasing from a specialized vendor is almost certainly the right choice.

The MIT Bottom Line

With only 5% of generative AI pilots achieving rapid revenue acceleration and purchased solutions succeeding at twice the rate of internal builds, the data is clear: for most institutions, partnering with specialized vendors like Element451 is the path to AI success.

As MIT's research demonstrates, the companies and institutions seeing transformative results are those that "pick one pain point, execute well, and partner smartly with companies who use their tools."

What Red Flags Should You Watch For in AI Implementations?

Not all AI solutions or POC outcomes are positive. Identifying red flags early helps you avoid costly mistakes and failed implementations. Here are critical warning signs:

1. "Magic Wand" Demos with No Real Integration

Warning Signs:

- Vendor demo seems too good to be true

- Can't explain how data flows between systems

- Requires manual CSV uploads or data dumps

- Vague about integration architecture

Why It's Bad: This is the "wrapper trap"—AI that appears to work but isn't truly integrated with your systems. In ongoing use, it will have stale data and limited functionality.

How to Spot:

- Ask: "Show me the data flow diagram"

- Request: "How does it connect to our systems?"

- Test: Can it update a record in real-time?

Real-World Impact: Organizations that fall for wrappers typically experience:

- Low user adoption

- Data quality issues

- Frequent inaccuracies

- Duplicate data problems

Green Flag Alternative: Element451's Counter-Approach: Direct system integration, real-time API connections, unified data model, native platform features.

2. Lack of Clear Success Metrics or Moving Goalposts

Warning Signs:

- No documented acceptance criteria by development start

- Criteria change mid-way without good reason

- Stakeholders keep adding "can it also do X?"

- POC timeline extends repeatedly

Why It's Bad: Without clear objectives, POC can never truly succeed or fail—it just drifts. This leads to endless experimentation and no decision.

The Pilot Purgatory Problem: 88% of AI pilots never reach production. Main reason? No clear criteria for moving forward.

How to Avoid:

- Document success criteria in Week 1

- Get stakeholder sign-off

- Stick to hard 6-week deadline

- Make go/no-go decision at end, don't extend indefinitely

Best Practice: Before starting any pilot, clearly define:

- Go/no-go decision criteria

- Timeline with hard deadlines

- Resource allocation

- Expected outcomes with quantifiable metrics

3. Consistent Hallucinations or Incorrect Information

Warning Signs:

- Pattern of confident but wrong answers

- Fabricating policy details or numbers

- Making up sources or citations

- Hallucinations persist even after knowledge updates

Why It's Bad: In enterprise settings where accuracy is critical, persistent hallucinations destroy trust. Users will abandon the system.

How to Test:

- Ask factual questions where you know the answer

- Check if AI cites sources or just invents

- Ask questions outside its knowledge domain

- Monitor for confident wrong answers

When to Worry:

- More than 10% of answers are wrong

- Any dangerously wrong answers (incorrect compliance info)

- Agent can't show how it derived answers

- Refuses to admit uncertainty

4. Inability to Handle Edge Cases or Variations

Warning Signs:

- Only works on scripted "happy path" inputs

- Breaks when phrasing varies slightly

- Can't handle typos or casual language

- Fails with concurrent users

Why It's Bad: Suggests brittleness. True AI agents should handle natural language variations reasonably well.

How to Test:

- Ask same question different ways

- Use casual language, typos, abbreviations

- Have multiple users test simultaneously

- Try unexpected but reasonable requests

Red Flag Example: You have to hard-code specific phrases to get it right. If not brittle, it should handle variations naturally.

5. No Human Oversight or Undo Mechanism

Warning Signs:

- AI can execute transactions with no review

- No way to roll back actions

- Vendor says "trust the AI, it won't make mistakes"

- No sandbox or approval features

- No audit trail

Why It's Bad: Mistakes will happen. Without human-in-the-loop options, they can't be caught or corrected.

Enterprise Requirement: Human-in-the-loop controls are essential for high-risk actions, requiring human approval for certain outputs.

Examples of Needed Oversight:

- Sending 1,000 emails → preview/approve first

- Approving applications → human review

- Updating student records → verification step

- Financial decisions → mandatory approval

6. Users or Staff Actively Dislike or Avoid the AI

Warning Signs:

- Low usage in POC when given choice

- Negative feedback: "frustrating," "unhelpful," "I'd rather talk to a human"

- Staff feel AI adds more work (constant monitoring/correction)

- Students turn it off or ignore it

Why It's Bad: Solution that isn't accepted by users will fail regardless of technical quality.

Distinguishing Factors:

- Resistance from fear of change → Can be addressed with training

- Resistance from genuine usability issues → Must be fixed

How to Address:

- Gather honest feedback

- Identify specific pain points

- Improve UX before scaling

- Consider if solution is right fit

7. Security or Compliance Gaps

Warning Signs:

- Can't explain how data is stored/secured

- Logs or shares sensitive data with vendor inappropriately

- Shows one user's data to another (permission failure)

- Requests overly broad access (full database dump vs. API)

- No FERPA compliance measures (for student data)

Why It's Bad: Security breach or compliance violation could be catastrophic.

How to Test:

- Ask for data security documentation

- Request architecture diagram

- Test permission boundaries

- Verify regulatory compliance claims

Enterprise Standard: Element451's SOC 2 compliance and continuous security monitoring exemplify proper security approach.

8. Overpromising Vendor / Under-delivering POC

Warning Signs:

- Vendor pitch: "99% accuracy out of box, no setup needed"

- POC reality: Required lots of manual effort, achieves 70% accuracy

- Promised features not available in POC

- Significant behind-the-scenes custom coding needed

Why It's Bad: Suggests product isn't as mature or automatic as claimed.

How to Spot:

- Gap between demo and POC experience

- Features "demoed" but not testable

- Unexpected heavy lifting required

- Product capabilities don't match marketing

Questions to Ask:

- "Can we test everything you demoed?"

- "What setup/configuration is really required?"

- "Are any features still in development?"

9. Pilot Purgatory Pattern

Warning Signs:

- POC extends past 6 weeks with no decision

- Stakeholders say "let's keep testing a bit more" repeatedly

- No clear owner for next steps

- No budget allocated for scaling

- Indefinite pilot with no production path

Why It's Bad: Organizational inertia kills projects. Even good technology fails without decision-making.

Root Causes:

- Lack of stakeholder alignment

- No executive champion

- Fear of commitment

- Unclear success criteria

- No plan for scaling

How to Avoid:

- Set hard 6-week deadline at start

- Require executive sponsor

- Document decision criteria upfront

- Make go/no-go decision at end, period

Research shows 62% of AI pilots never reach production—don't become part of that statistic.

Document and Address Red Flags

When you spot red flags:

- Document specifically: "Red Flag: AI required manual CSV data updates, indicating no real-time integration"

- Identify impact: "This could lead to stale answers and data quality issues"

- Suggest mitigation: "Vendor would need to implement API integration to proceed"

- Make go/no-go call: Either get assurances gaps can be fixed, or decide risk is too high

Example Decision: "Red Flag: Agent hallucinated answers for 2 compliance questions. Mitigation: Need stricter knowledge grounding or hard constraints before production. Recommendation: Go forward with condition that knowledge base is expanded and accuracy improves to >95% before scaling."

How Can You Quickly Assess AI Solution Quality?

Sometimes you need to quickly assess an AI solution's credibility before committing to a full POC. These "smell tests" can reveal a lot in minutes:

1. Integration Test Questions

What to Ask: "How do you connect to our data, and can you show the AI updating a record in real-time?"

Strong Answer:

- Demonstrates authenticated API integration

- Shows AI writing back to system (creating note, changing field)

- Explains data flow clearly

- Element451's approach: Direct API integration, real-time updates, full audit trails

Weak Answer:

- "We scrape your website and you upload spreadsheets"

- Vague on integration details

- Can't demonstrate write-back capability

- Requires manual data exports

Immediate Insight: Weak answer = shallow integration ("wrapper trap")

2. "Ask It Something It Shouldn't Know" Test

What to Do: Ask AI a question it could only answer correctly if it truly has access to your internal knowledge.

Example: "According to our 2023 handbook, what is the prerequisite for Biology 201?"

Good Sign:

- Correctly answers with internal detail

- Cites handbook or knowledge source

- Shows it ingested your content

Bad Sign:

- Generic or wrong answer

- Pulls from internet instead of your data

- Hallucinate information

Also Test Constraints: Ask for something it shouldn't have: "Show me another student's email address"

Should refuse or mask the request.

Insight: Tests both integration and hallucination tendencies.

3. Multi-Turn Consistency Check

What to Do: Have quick back-and-forth to see if AI stays coherent.

Example Conversation:

Good Sign:

- AI adapts to correction

- Remembers context

- Maintains coherence

Bad Sign:

- Ignores correction

- Gets confused

- Can't summarize previous answer

Quick Test: Ask: "Can you repeat that in one sentence?"

If AI can't summarize what it just said → poor dialogue state tracking.

4. Edge Case Query

What to Do: Ask something out-of-left-field but not inappropriate.

Examples:

- "Which campus has better pizza, north or south?"

- "What's the meaning of life?"

- "Tell me a joke about admissions"

Good Response:

- Polite deflection

- Stays on-brand

- Doesn't invent random answer

Bad Response:

- Confidently makes things up

- Inappropriate or off-brand

- Takes question too seriously

Insight: Observe how it handles questions it wasn't trained for. Should gracefully deflect, not hallucinate.

5. Permission Check

What to Do: Test with limited-permission user account.

Example: Log in as student, ask: "Show me all applicants from last year"

Should Refuse: Student shouldn't access that data.

Green Flag Response: "I don't have access to that information" or similar refusal.

Red Flag Response: Shows the data anyway = security problem.

Alternative: Ask vendor: "How does AI respect permission models?"

Good Answer: "Operates within your existing permission structure through SSO" (Element451's approach)

Bad Answer: "We give it an admin API key to everything"

6. Stability Under Rapid Fire

What to Do: Type several questions quickly or interrupt mid-response.

Good Sign:

- Handles overlapping queries

- Queues them appropriately

- Doesn't crash or mix up contexts

Bad Sign:

- Gets confused

- Crashes

- Mixes up which question it's answering

Also Test:

- Multiple people chatting simultaneously

- Long questions

- Background noise (for voice agents)

Insight: Shows practical limits and robustness.

7. Transparency Quiz

What to Ask:

- "What do you do when you don't know an answer?"

- "How do you ensure your answers are up to date?"

- "Can you cite your sources?"

Good Responses:

- Clear fallback strategy