How AI Agents Change Application Review (for the Better)

by Eric Range · Sep 04, 2025

When I worked in admissions at Drew University, I lived the same reality many teams face today: application surges, long nights, and the constant fear that we’d miss something important in the pile. If I had a time machine, I’d bring Bolt Agents back with me. Not to replace people, but to give every reader an AI teammate that never gets tired, reads consistently, and flags risk before it reaches our desks. And when I later crossed to the other side of the desk, into enrollment and tech consulting, I saw the same challenges on campus after campus.

Why speed and fairness can finally coexist

Backlogs aren’t just stressful—they’re consequential. Students wait weeks for decisions. Staff morale dips. And fraud has become a tax on the entire process. In California’s community colleges, roughly a third of applications were flagged as fraudulent in 2024, with $10–11M+ in aid siphoned off—a signal of what’s coming for everyone else. The Department of Education even resumed specific FAFSA fraud flags this year.

At the same time, the flood of fake or low-quality files creates equity problems. Real students get stuck behind bots. Review quality becomes inconsistent as fatigue sets in. And on one bad day, a Maryland college received 80 fake applications in a couple of hours.

What changes with agents (and what your people do next)

Here’s the shift I wish I’d had:

- First reads in minutes. Agents complete the initial read, apply a rubric, and route each file to the right queue, fast. We routinely see first reads in under 5 minutes, 95%+ alignment with human scoring, and roughly 250 hours saved per 5,000 applications (about 3 minutes per file). Staff time moves from slog to judgment.

- Fraud gets paused, not passed through. Agents place a “fraud-check pause” on suspicious files, so those files wait for a human check instead of clogging the reading queue, and readers spend their time on what matters most: real applicants. (A community college leader noted that adding this pause reduced false entries and increased yield.)

- Readers shift to higher-value work. Calibrate rubrics. Handle edge cases. Contextualize nuance (portfolio, life story, essay). Call admits quickly. Meet families. Morale rises because the job looks like the job you signed up for.

If you’re thinking, “But what will my readers do if agents do the reading?”, this is the answer. They’ll do the human things that actually move yield and equity: deeper evaluations, faster decisions, and proactive outreach that builds connections with students and families.

“Agentic” isn’t a switch—it’s a manager’s mindset

Moving to agents isn’t “flip it on and walk away.” It’s a partnership:

- Start with training wheels. Require human approval for 100% of agent actions on day one, then taper as confidence grows. (Yes, you can dial autonomy and guardrails per job.)

- Co-own the rubric. Your best readers encode what “good” looks like. The agent applies it consistently; you review the deltas and tune.

- Narrate the change. People aren’t worried about work changing—they’re worried about worth. Be explicit: agents remove repetitive tasks so staff can do relationship and judgment work at the top of their license.

- Measure what matters. Track time-to-first-read, human-AI score alignment, fraud-pause accuracy, and decision speed by segment. Celebrate wins publicly; tune miss rates privately.
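Here is a minimal Python sketch of three of those metrics. It assumes a hypothetical per-file export (`ReviewRecord`) holding submission and first-read timestamps, the agent's rubric score, the blind human scores from calibration, and fraud-pause outcomes. It also assumes two definitions the post leaves open: “alignment” means the agent's score lands within a chosen tolerance of the human average, and “fraud-pause accuracy” is measured as precision (confirmed fraud divided by files paused). None of these names or definitions come from the platform itself; treat them as placeholders.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean, median


@dataclass
class ReviewRecord:
    # Hypothetical per-file record; adapt the fields to your own data export.
    submitted_at: datetime
    first_read_at: datetime
    agent_score: float
    human_scores: list[float]        # e.g. two blind human reads from calibration
    paused_for_fraud: bool = False
    confirmed_fraud: bool = False    # set after a human reviews a paused file


def score_alignment(records: list[ReviewRecord], tolerance: float = 0.5) -> float:
    """Share of files whose agent score is within `tolerance` of the human average."""
    if not records:
        return 0.0
    aligned = sum(
        1 for r in records if abs(r.agent_score - mean(r.human_scores)) <= tolerance
    )
    return aligned / len(records)


def median_minutes_to_first_read(records: list[ReviewRecord]) -> float:
    """Median minutes from submission to first read (raises StatisticsError if empty)."""
    return median(
        (r.first_read_at - r.submitted_at).total_seconds() / 60 for r in records
    )


def fraud_pause_precision(records: list[ReviewRecord]) -> float:
    """Of the files paused for fraud, the share a human later confirmed as fraud."""
    paused = [r for r in records if r.paused_for_fraud]
    if not paused:
        return 0.0
    return sum(r.confirmed_fraud for r in paused) / len(paused)
```

Decision speed by segment can reuse the same median helper on records filtered by program or applicant type. The tolerance is the main knob: a tighter value makes a 95%+ alignment target harder to hit, so it is worth fixing before the calibration run rather than after.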

Practical steps (the first 30–45 days)

Borrowed from our agent playbooks and tuned for Application Reading:

- Define the outcome and queues. Agree on the “north star”: faster, fair decisions. Create four queues: Auto-Admit, Human-Review, Fraud-Pause, and Auto-Deny (policy-based).

- Encode a minimum viable rubric. Keep it simple at first: required docs present, GPA/credit thresholds, program fit markers, and any must-deny triggers (e.g., identity mismatch).

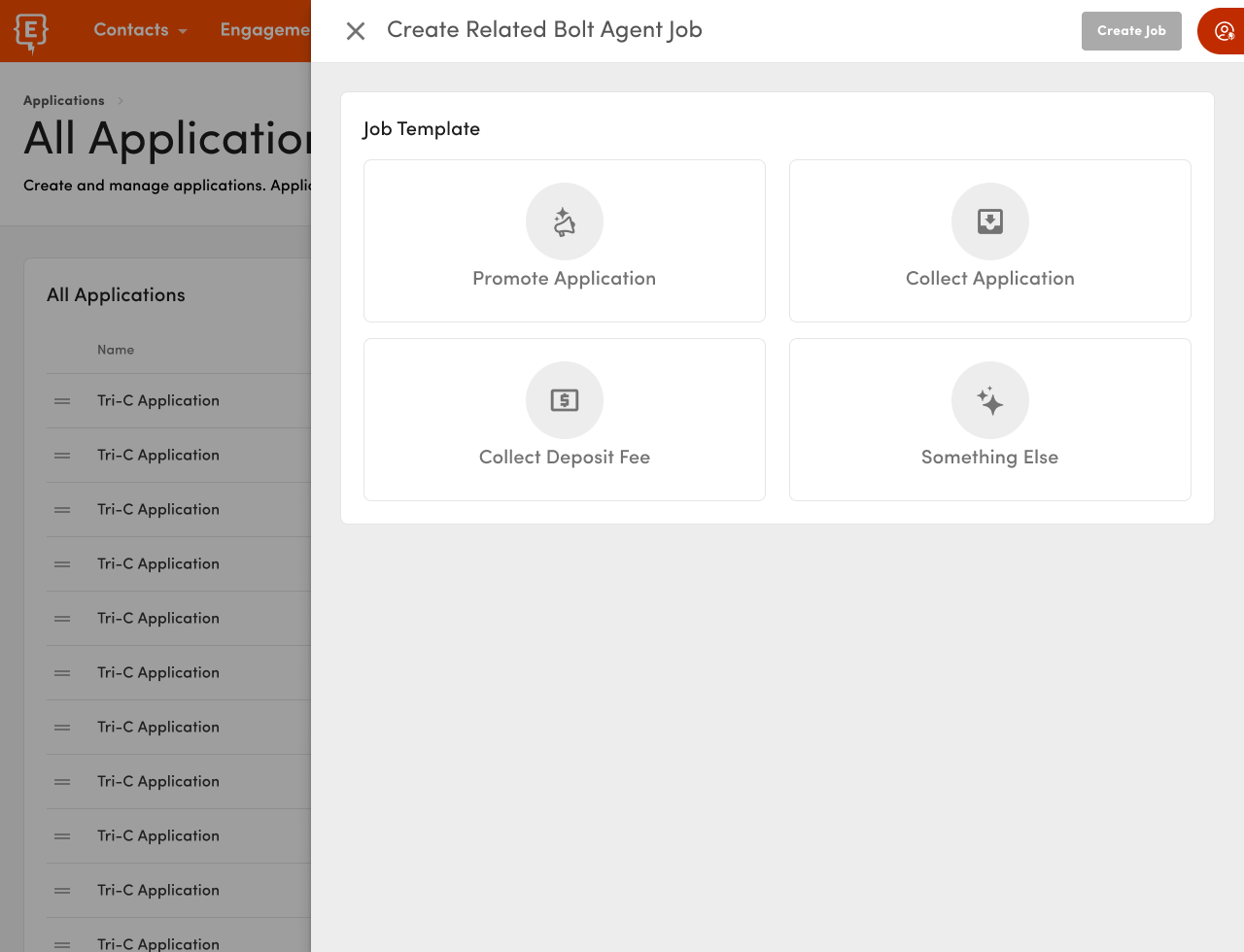

- Onboard your Admissions Agent with skills (read, score, route, message) and permissions (approval required for certain actions). Start Jobs with clear goals, such as “Score & Route” or “Submit Missing Documents.”

- Run a 50-file calibration. The agent reads each file; two humans score it blind. Compare alignment. Tighten the rubric where variance is high; expand autonomy where the agent is consistently high-confidence. (Expect more than 90% alignment quickly.)

- Activate a fraud-check pause. Pair lightweight ID verification with anomaly rules (IP address/device, submission velocity, duplicate data). Review paused files daily; document false positives and tune thresholds.

- Close the loop with students. Let agents handle document chases and status nudges automatically so counselors spend their time on admits and edge cases.

- Publish the working agreement. One page. What agents do. What humans own. When to escalate. How approvals work now vs. when autonomy expands. (This reduces “am I being replaced?” anxiety.)
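To show how the queues, the minimum viable rubric, and the fraud-check pause can fit together, here is a rough Python sketch of a routing step. The field names, required-document list, thresholds, and the `route` and `fraud_signals` functions are all hypothetical placeholders chosen for illustration, not Bolt Agents' actual configuration; real thresholds should come out of the 50-file calibration.

```python
from dataclasses import dataclass
from enum import Enum


class Queue(Enum):
    AUTO_ADMIT = "Auto-Admit"
    HUMAN_REVIEW = "Human-Review"
    FRAUD_PAUSE = "Fraud-Pause"
    AUTO_DENY = "Auto-Deny"


@dataclass
class Application:
    # Hypothetical fields; map them to whatever your application system exports.
    app_id: str
    email: str
    phone: str
    gpa: float
    docs: frozenset[str]              # documents received so far
    fit_markers: int                  # program-fit markers found by the rubric read
    id_verified: bool                 # outcome of lightweight ID verification
    identity_mismatch: bool           # policy-level must-deny trigger
    apps_from_same_ip_last_hour: int  # submission velocity from one IP/device


# Illustrative thresholds only; calibrate these against your own human reads.
REQUIRED_DOCS = frozenset({"transcript", "photo_id"})
MIN_GPA = 2.5
MIN_FIT_MARKERS = 2
MAX_APPS_PER_IP_PER_HOUR = 5


def fraud_signals(app: Application, seen: set[str]) -> list[str]:
    """Anomaly rules that send a file to Fraud-Pause for a human check."""
    signals = []
    if not app.id_verified:
        signals.append("ID verification incomplete")
    if app.apps_from_same_ip_last_hour > MAX_APPS_PER_IP_PER_HOUR:
        signals.append("high submission velocity from one IP/device")
    # Duplicate-data check: the first file with a given email+phone passes,
    # and later files reusing the same contact data are flagged.
    key = f"{app.email.strip().lower()}|{app.phone}"
    if key in seen:
        signals.append("duplicate contact data")
    seen.add(key)
    return signals


def route(app: Application, seen: set[str]) -> tuple[Queue, list[str]]:
    """Apply the rubric and return a queue plus the reasons behind it."""
    signals = fraud_signals(app, seen)
    if signals:
        return Queue.FRAUD_PAUSE, signals
    if app.identity_mismatch:
        return Queue.AUTO_DENY, ["policy trigger: identity mismatch"]
    missing = REQUIRED_DOCS - app.docs
    if missing:
        return Queue.HUMAN_REVIEW, [f"missing documents: {sorted(missing)}"]
    if app.gpa >= MIN_GPA and app.fit_markers >= MIN_FIT_MARKERS:
        return Queue.AUTO_ADMIT, ["meets GPA threshold and program-fit markers"]
    return Queue.HUMAN_REVIEW, ["below auto-admit thresholds; needs a human read"]
```

The ordering is the main design choice. Anomaly checks run first, so a suspicious file is held for a person rather than auto-decided; Auto-Deny fires only on an explicit policy trigger, never on a low score; and anything short of the auto-admit bar lands in Human-Review. The `reasons` list gives readers something concrete to check when they review deltas, and during the training-wheels phase each returned queue would still be a proposal awaiting human approval rather than a final action.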

But will this actually move the numbers?

Early adopters show it does. Teams are cutting processing time dramatically (one partner described near-instant admit decisions when criteria are met) and boosting yield while filtering out fraudulent submissions. Across implementations we see the same pattern: faster first reads, fewer manual hours, better consistency.

The macro environment supports moving now. Fraud is up (and getting more sophisticated), and federal controls are tightening. Waiting won’t make next cycle easier.

A final word to admissions leaders

Agentic admissions isn’t about cutting headcount. It’s about protecting your team’s attention for the decisions and relationships only humans can make—and giving students timely, fair outcomes.

Want the step-by-step? We’ve published a practical playbook on standing up AI agents (skills, approvals, jobs, measurement). Start there and send me your questions.

👉 For a step-by-step guide, see our playbook: Application First Reads with AI Agents.

About Element451

Boost enrollment, improve engagement, and support students with an AI workforce built for higher ed. Element451 makes personalization scalable and success repeatable.
